Why evaluating security testing methodologies actually matters

When a project is young, security often sounds like something you’ll “bolt on later.” In reality, the way you approach testing from day one will quietly decide whether you’re shipping a resilient product or a ticking time bomb. Evaluating your project’s security testing methodologies is less about chasing buzzwords and more about checking if your current approach can realistically catch the kinds of attacks your system will face. Think of it as a health check for how you design, build and validate software, not just a one‑off scan. This beginner guide focuses on helping you understand what questions to ask, what signals to look for, and when a simple tool-based approach stops being enough and you need structured processes or external help. By the end, you should be able to tell whether your current testing strategy is shallow box‑ticking or a meaningful shield against real‑world threats.

Clarifying what “security testing methodologies” really are

Before you can evaluate anything, you need a concrete idea of what you’re evaluating. A security testing methodology is essentially a repeatable way of proving that your system behaves securely under expected and unexpected conditions. It’s broader than just running a scanner once a month. A methodology usually defines: what gets tested (assets, components, APIs), how it gets tested (manual checks, automation, threat modeling), how often, what standards it follows, and how findings get triaged and fixed. For example, penetration testing for web applications is only one slice of the picture; your methodology might also include code-level analysis, dependency checks, infrastructure reviews, and regular re‑testing after deployments. Evaluating yours means looking at this whole chain and asking: is it systematic, measurable, and aligned with the real risks to this particular project?
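
One way to make the elements listed above concrete is to write the methodology down as data, so that missing pieces become checkable instead of implicit. The sketch below is a minimal illustration. The `Methodology` class and its field names are hypothetical, not a standard schema.

```python
from dataclasses import dataclass, field


@dataclass
class Methodology:
    """Hypothetical record of a security testing methodology.

    Each field mirrors one element a methodology usually defines.
    """
    scope: list[str] = field(default_factory=list)       # assets, components, APIs under test
    techniques: list[str] = field(default_factory=list)  # e.g. manual checks, SAST, threat modeling
    cadence: str = ""                                    # how often tests run
    standards: list[str] = field(default_factory=list)   # e.g. OWASP ASVS
    triage_owner: str = ""                               # who triages findings and tracks fixes

    def missing_elements(self) -> list[str]:
        """Return the names of elements that are still undefined (empty)."""
        return [name for name, value in vars(self).items() if not value]


draft = Methodology(
    scope=["public API", "payments service"],
    techniques=["SAST in CI", "annual pentest"],
    cadence="per pull request + yearly",
)
print(draft.missing_elements())  # ['standards', 'triage_owner']
```

An empty field is a prompt for a conversation, not proof of a gap: the team may follow a standard informally without having written it down.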

Core principles experts recommend for beginners

Security consultants and experienced engineering leaders tend to repeat a few core principles, regardless of company size or tech stack. First, testing must be risk‑driven: you test more deeply where a compromise would really hurt—payment flows, authentication, sensitive data paths. Second, methodologies must be repeatable; if they depend on a single “security hero,” they are fragile. Third, your approach should mix automation with thoughtful human review, because scanners are fast but blind to business logic flaws, while humans are slower but can reason about abuse cases. Finally, seasoned experts stress the importance of feedback loops. Every issue discovered in production, during audits, or by bug bounty hunters should feed back into your testing methodology so that the same weakness is caught earlier next time. If your current setup ignores these principles, you have clear areas for improvement.
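
A risk‑driven approach can start as simply as scoring components and letting the score decide how deep testing goes. This sketch assumes a common impact × likelihood scoring on 1 to 5 scales, and its tier thresholds are arbitrary; both are illustrative choices, not a prescribed method.

```python
from typing import NamedTuple


class Component(NamedTuple):
    name: str
    impact: int      # 1 (minor) to 5 (severe) if compromised; illustrative scale
    likelihood: int  # 1 (unlikely) to 5 (very likely) to be attacked


def choose_test_depth(component: Component) -> str:
    """Map an impact x likelihood score to a testing tier (thresholds are assumptions)."""
    score = component.impact * component.likelihood
    if score >= 15:
        return "deep"      # manual review + pentest + automated scans
    if score >= 8:
        return "standard"  # automated scans + targeted manual checks
    return "baseline"      # automated scans only


# Hypothetical components and scores.
components = [
    Component("payment flow", impact=5, likelihood=4),
    Component("login", impact=5, likelihood=3),
    Component("marketing pages", impact=1, likelihood=3),
]
for c in sorted(components, key=lambda c: c.impact * c.likelihood, reverse=True):
    print(f"{c.name}: {choose_test_depth(c)}")
```

The numbers matter less than the habit: writing scores down forces the team to say explicitly why one area gets more scrutiny than another.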

Essential security testing tools you actually need

A common beginner mistake is either to rely on one shiny scanner for everything or to drown in overlapping tools without understanding what each category covers. A practical baseline for most projects includes at least four tool types. Static analysis tools scan source code or binaries for common weakness patterns before deployment. Software composition analysis checks third‑party libraries for known vulnerabilities. Dynamic scanners probe running applications for misconfigurations and exploitable behavior. Finally, specialized tools target infrastructure-as-code, containers, and cloud configuration. Experts recommend starting small but covering each layer: code, dependencies, runtime and environment. Over time, you can expand into fuzzing, advanced API testing and custom scripts that mimic your project’s unique behavior. The key evaluation question is not “do we own tools?” but “do our tools map to how and where we’re actually likely to be attacked?”

Recommended tool categories for beginners

To avoid choice paralysis, treat tools as building blocks that fill specific gaps in your methodology, not as status symbols. Start by selecting one reliable product in each category, plug it into your delivery pipeline, and track how many true positives versus false alarms it generates over a few releases. This quickly reveals which tools offer real value for your particular architecture and which just create noisy reports nobody reads. Seasoned security engineers advise against chasing the latest trend while the basics, such as simple dependency scanning, are not consistently in place. If your stack is mostly web‑based, for instance, prioritize dynamic scanners and intercepting proxies that support realistic penetration testing for web applications, plus browser developer tools that let you see requests exactly as an attacker would. As your project matures, you can swap in more advanced or specialized solutions where they genuinely reduce risk.
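
Tracking true positives against false alarms needs little more than a tally of triaged findings per tool. This sketch assumes each finding has already been marked by a reviewer as real or not; the tool names and data are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical triaged findings: (tool name, release, reviewer confirmed it as real?)
triaged = [
    ("sast-tool", "1.0", True),
    ("sast-tool", "1.0", False),
    ("sast-tool", "1.1", False),
    ("sast-tool", "1.1", False),
    ("sca-tool", "1.0", True),
    ("sca-tool", "1.1", True),
    ("sca-tool", "1.1", False),
]


def precision_by_tool(findings):
    """Share of each tool's findings that reviewers confirmed as real issues."""
    confirmed = defaultdict(int)
    total = defaultdict(int)
    for tool, _release, is_real in findings:
        total[tool] += 1
        confirmed[tool] += is_real  # True counts as 1, False as 0
    return {tool: confirmed[tool] / total[tool] for tool in total}


for tool, share in precision_by_tool(triaged).items():
    print(f"{tool}: {share:.0%} of findings were real")
```

Grouping by the release field as well would show whether tuning is improving a noisy tool over time.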

Practical checklist of helpful tools

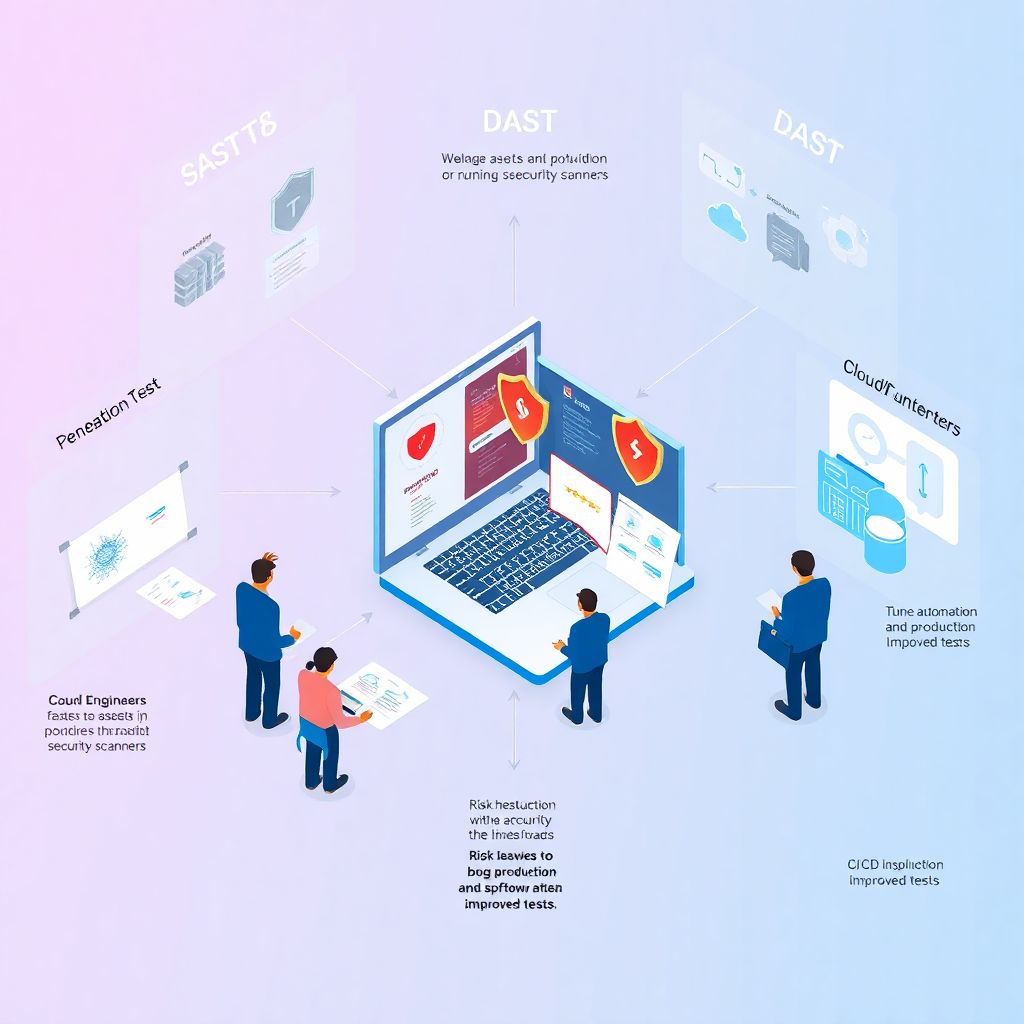

– Static Application Security Testing (SAST) scanner integrated into CI

– Software Composition Analysis (SCA) for dependencies and licenses

– Dynamic Application Security Testing (DAST) for running services

– Container and cloud configuration scanners for infrastructure
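
To check the advice about covering every layer (code, dependencies, runtime and environment), the tools you actually run can be mapped onto those layers. The category-to-layer mapping below is an assumption drawn from the checklist, and the inventory is hypothetical.

```python
# Which layer each checklist category covers (an illustrative mapping).
CATEGORY_TO_LAYER = {
    "SAST": "code",
    "SCA": "dependencies",
    "DAST": "runtime",
    "IaC/container/cloud scanner": "environment",
}
REQUIRED_LAYERS = {"code", "dependencies", "runtime", "environment"}


def uncovered_layers(tool_categories):
    """Return the layers that no tool in the inventory covers, sorted for stable output."""
    covered = {CATEGORY_TO_LAYER[c] for c in tool_categories if c in CATEGORY_TO_LAYER}
    return sorted(REQUIRED_LAYERS - covered)


# Hypothetical inventory: two overlapping SAST tools, while runtime and environment go untested.
inventory = ["SAST", "SAST", "SCA"]
print(uncovered_layers(inventory))  # ['environment', 'runtime']
```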

Step‑by‑step process to evaluate your current methodology

Instead of trying to judge your entire security posture in one sitting, break the evaluation into manageable steps. Begin by documenting what you already do, even if it seems informal: when do you run scans, who reviews the output, and how are issues tracked? Then compare this reality with what you thought your methodology was; mismatches highlight gaps in discipline or communication. Next, map your system: list critical components, data stores, key APIs and user journeys. This helps you see whether the most sensitive areas receive appropriate testing depth and frequency. Follow that by inspecting your tools, playbooks and escalation paths to understand whether they form a coherent process or just a pile of unconnected activities. At each step, ask not only “do we do this?” but “is it effective?” by checking metrics like time to remediate, recurring issues and production incidents.

Detailed evaluation steps with expert hints

Start with scope: security specialists insist that any methodology review must be grounded in an up‑to‑date asset inventory. If you are testing a subset of services that no longer handle the riskiest data, your strategy is misaligned. Once the inventory is clear, examine test coverage by layer: client, API, backend, data, infrastructure. For each, identify which tests are automated and which are manual. Experts suggest giving special attention to identity and access control flows, where subtle mistakes often slip through. Then evaluate timing: do tests run on each pull request, on every release candidate, or only quarterly? Long gaps between code changes and testing increase the chance of unpleasant surprises. Finally, review governance: who owns the methodology, reviews exceptions and approves risk acceptance? If ownership is fuzzy, that is usually where security debt quietly accumulates.
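
The layer‑by‑layer coverage and timing review described above can be captured as a small coverage matrix. In this sketch the layers come from the paragraph, but the test entries, cadence labels and the rule for flagging a layer are assumptions for illustration.

```python
LAYERS = ["client", "API", "backend", "data", "infrastructure"]

# Hypothetical inventory: (layer, test description, automated?, cadence)
tests = [
    ("client", "DAST crawl of staging", True, "release"),
    ("API", "authz regression suite", True, "pull_request"),
    ("backend", "SAST", True, "pull_request"),
    ("data", "access review", False, "quarterly"),
]


def layer_gaps(tests, layers=LAYERS):
    """Flag layers with no tests, or with no automated test running per PR or per release."""
    frequent = {"pull_request", "release"}
    gaps = {}
    for layer in layers:
        entries = [t for t in tests if t[0] == layer]
        if not entries:
            gaps[layer] = "no tests"
        elif not any(automated and cadence in frequent for _, _, automated, cadence in entries):
            gaps[layer] = "no frequent automated test"
    return gaps


print(layer_gaps(tests))
# {'data': 'no frequent automated test', 'infrastructure': 'no tests'}
```

A flagged layer is not automatically a problem; a quarterly manual access review may be the right choice for some data stores, but the decision should be deliberate.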

Step‑by‑step evaluation outline

– List assets, data flows and critical user journeys

– Document all current tests and when they run

– Map tools and manual processes to specific risks

– Review metrics: recurring issues, time to fix, incident history
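
The outline item about mapping tools and manual processes to specific risks lends itself to a simple traceability check: every identified risk should point to at least one test. The risk IDs, assets and test names below are hypothetical.

```python
# Hypothetical risk register: risk id -> (asset, description)
risks = {
    "R1": ("payments API", "broken object-level authorization"),
    "R2": ("login", "credential stuffing"),
    "R3": ("file upload", "malicious file execution"),
}

# Hypothetical mapping of tests and manual processes to the risks they address.
test_map = {
    "authz regression suite": ["R1"],
    "rate-limit integration test": ["R2"],
    "annual pentest": ["R1", "R2"],
}


def untested_risks(risks, test_map):
    """Return the ids of risks that no test or process is mapped to."""
    covered = {rid for risk_ids in test_map.values() for rid in risk_ids}
    return sorted(set(risks) - covered)


for rid in untested_risks(risks, test_map):
    asset, description = risks[rid]
    print(f"{rid}: no test covers '{description}' on {asset}")
```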

Where third‑party services fit in your evaluation

At some stage, especially in growing organizations, internal efforts alone will not give you the depth and independence you need. This is where external providers come in, from on‑demand audits to fully managed testing services for businesses that want continuous coverage without building a large in‑house team. When evaluating your methodology, ask whether and how you use these services. Are external testers used only as a compliance checkbox, or do their findings actually influence your internal routines and developer education? Also look at the alignment between provider capabilities and your architecture: if you rely heavily on APIs and microservices, choose partners familiar with those patterns, not just classic monolithic web apps. A mature methodology treats external partners as an extension of your own process, feeding fresh insights back into design and development.

Understanding VAPT and its role in your strategy

Many beginners encounter the term vulnerability assessment and penetration testing (VAPT) and treat it as a magical one‑size‑fits‑all solution. In practice, it is a useful but bounded component of a broader methodology. A vulnerability assessment focuses on breadth: scanning for known weaknesses, misconfigurations and missing patches. Penetration testing focuses on depth: simulating real attackers trying to chain issues into meaningful exploits. Evaluating your project’s approach means asking how often you perform each, what triggers them (time‑based, major releases, architecture changes), and whether findings are fed back into root‑cause analysis. Security experts generally advise that early‑stage projects at least run a basic VAPT before major launches, then increase frequency and sophistication as the user base and data sensitivity grow. If your only testing is a one‑off pentest from two years ago, your methodology is outdated by default.
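
The triggers mentioned above (time‑based, major releases, architecture changes) can be written as an explicit rule instead of being left to memory. The 365‑day interval and the semantic‑versioning reading of “major release” below are assumptions, not recommendations from any standard.

```python
from datetime import date


def vapt_due(last_vapt: date, today: date, last_tested_version: str,
             current_version: str, architecture_changed: bool,
             max_age_days: int = 365) -> list[str]:
    """Return the reasons a new VAPT engagement is due (an empty list means none).

    Assumes versions follow MAJOR.MINOR.PATCH and that a MAJOR bump counts as a major release.
    """
    reasons = []
    if (today - last_vapt).days > max_age_days:
        reasons.append("time-based: last VAPT is too old")
    if int(current_version.split(".")[0]) > int(last_tested_version.split(".")[0]):
        reasons.append("major release since last VAPT")
    if architecture_changed:
        reasons.append("architecture change")
    return reasons


# Hypothetical project: last tested two years ago on 1.4.0, now shipping 2.0.0.
print(vapt_due(date(2022, 3, 1), date(2024, 3, 1), "1.4.0", "2.0.0", False))
```

Returning reasons rather than a bare yes/no makes it easier to brief the testers on what changed since the last engagement.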

Comparing in‑house approaches with application security testing services

Another decision point in your evaluation is whether pure in‑house testing is still adequate, or whether you should incorporate application security testing services from specialized vendors. These services can provide structured test plans aligned to standards like OWASP ASVS, threat modeling assistance, and repeatable regression testing on each big release. When you assess your methodology, look at skill distribution: do your developers and DevOps teams have enough security experience to design effective tests for complex features such as multi‑tenant access models, cryptographic flows or regulatory constraints? If not, borrowing expertise through targeted services can drastically raise the floor of your assurance. The trick is to avoid outsourcing thinking entirely. Internal staff should stay involved, use the results to sharpen their own practices, and gradually absorb patterns so that the organization becomes less dependent on external help over time.

Evaluating penetration testing for web applications specifically

For projects that interact with users through browsers or APIs, web application security deserves particular focus. Evaluating your methodology here involves more than checking that a pentest report exists. Inspect the depth of testing: are testers exploring business logic, authorization edge cases and abuse of legitimate features, or just running automated scanners against public endpoints? Expert testers will try scenarios like privilege escalation across tenants, abuse of forgotten admin endpoints and creative data poisoning in analytics or machine learning features. Look at how clearly reports explain the impact and exploitation steps; vague findings are harder for developers to prioritize and fix. Finally, review follow‑up: do you re‑test after critical fixes, and are lessons learned turned into automated tests, secure coding guidelines and review checklists? If not, you are paying for temporary visibility instead of durable improvement.
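
One way to turn a pentest finding into durable improvement is to encode it as a regression test. The sketch assumes a hypothetical finding (a user in one tenant could read another tenant’s invoice) and a hypothetical `get_invoice` handler; the shape of the test is the point, not the specific code.

```python
class Forbidden(Exception):
    """Raised when a caller may not access a resource."""


# Hypothetical in-memory store: invoice id -> owning tenant.
INVOICES = {"inv-1": "tenant-a", "inv-2": "tenant-b"}


def get_invoice(invoice_id: str, caller_tenant: str) -> dict:
    """Return an invoice only if it belongs to the caller's tenant (the fixed behavior)."""
    owner = INVOICES.get(invoice_id)
    if owner is None or owner != caller_tenant:
        # Same error for "missing" and "other tenant", so invoice ids cannot be probed.
        raise Forbidden(invoice_id)
    return {"id": invoice_id, "tenant": owner}


def test_own_invoice_is_readable():
    assert get_invoice("inv-1", "tenant-a")["tenant"] == "tenant-a"


def test_other_tenant_invoice_is_rejected():
    # Regression test for the hypothetical cross-tenant finding.
    try:
        get_invoice("inv-2", "tenant-a")
    except Forbidden:
        return
    raise AssertionError("cross-tenant read was allowed")


if __name__ == "__main__":
    test_own_invoice_is_readable()
    test_other_tenant_invoice_is_rejected()
    print("cross-tenant regression tests passed")
```

The tests are plain functions, so a runner such as pytest can collect them, and the re‑test after the fix then happens on every build rather than only at the next engagement.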

Using metrics and feedback loops to judge effectiveness

A security testing methodology is only as good as the outcomes it produces. To evaluate yours objectively, you need basic metrics and honest retrospectives. Start tracking the number of issues discovered before production versus after, grouping them by severity and root cause. If high‑impact issues keep surfacing from bug bounties or customer reports rather than your own tests, that is a strong signal your methodology is too shallow. Also monitor remediation times: long delays indicate either poor prioritization or friction in your development workflows. Experts suggest conducting brief post‑mortems for serious findings: what type of test should have caught this earlier, and how can you adjust tooling or process to do so? Over time, aim for a trend where severe vulnerabilities are increasingly found earlier in the lifecycle, and recurring patterns are gradually eliminated rather than patched repeatedly in isolation.
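
Both signals described above, where issues are found and how long they take to fix, can be computed from an issue‑tracker export. The record format and sample data below are hypothetical, and “escape rate” here simply means the share of issues first found in production.

```python
from collections import Counter
from datetime import date
from statistics import median

# Hypothetical export: (severity, found_in_production, opened, fixed)
issues = [
    ("high", False, date(2024, 1, 2), date(2024, 1, 5)),
    ("high", True, date(2024, 2, 1), date(2024, 2, 20)),
    ("high", True, date(2024, 3, 1), date(2024, 3, 4)),
    ("low", False, date(2024, 1, 10), date(2024, 2, 9)),
]


def escape_rate_by_severity(issues):
    """Fraction of issues per severity that were first found in production."""
    total = Counter(sev for sev, *_ in issues)
    escaped = Counter(sev for sev, in_prod, *_ in issues if in_prod)
    return {sev: escaped[sev] / total[sev] for sev in total}


def median_days_to_fix(issues, severity):
    """Median number of days from opening to fix for one severity level."""
    return median((fixed - opened).days for sev, _, opened, fixed in issues if sev == severity)


print(escape_rate_by_severity(issues))     # high: 2 of 3 escaped, low: 0 of 1
print(median_days_to_fix(issues, "high"))  # fix times 3, 19, 3 days -> median 3
```

Watching these numbers per quarter, rather than as one snapshot, is what shows whether severe issues are moving earlier in the lifecycle.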

Troubleshooting common problems with your current approach

When beginners first evaluate their testing methodology, they often discover problems but feel unsure how to fix them. Low engagement from developers is a frequent issue; if reports are too noisy or abstract, teams will quietly ignore them. To troubleshoot this, tune tools to reduce false positives, prioritize findings with clear risk descriptions, and integrate results into the same ticketing systems engineers already use. Another common challenge is coverage gaps, where some services or environments almost never get tested. Solve this by defining minimal testing baselines per component type, then automating as much as possible so that no system’s coverage depends on someone remembering to test it. Finally, watch out for “once and done” testing mindsets. If pentests or scans do not trigger changes in coding practices, threat models or test suites, your methodology remains static while attackers and your own software evolve.

Typical issues and practical fixes

– Too many false positives: calibrate tools, disable noisy rules, and focus on exploitable patterns first

– Repeated vulnerabilities: introduce targeted training and secure code review checklists for problem areas

– Slow remediation: embed security champions in teams to help triage and negotiate realistic but tight deadlines
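
Calibrating a noisy tool often comes down to a reviewed suppression list plus an ordering that puts likely‑exploitable findings first. The finding fields, rule IDs and the `reachable` flag below are hypothetical; real scanners expose their own formats.

```python
from dataclasses import dataclass

SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}

# Rules the team reviewed and decided to disable, with the reason recorded (hypothetical ids).
SUPPRESSED_RULES = {
    "weak-random-in-tests": "only used in test fixtures",
}


@dataclass
class Finding:
    rule_id: str
    severity: str
    reachable: bool  # hypothetical flag: is the flagged code reachable from user input?


def triage(findings):
    """Drop suppressed rules, then order reachable findings first, most severe first."""
    kept = [f for f in findings if f.rule_id not in SUPPRESSED_RULES]
    return sorted(kept, key=lambda f: (not f.reachable, SEVERITY_RANK[f.severity]))


findings = [
    Finding("weak-random-in-tests", "medium", False),
    Finding("sql-injection", "high", True),
    Finding("outdated-header", "low", True),
    Finding("xxe", "critical", False),
]
for f in triage(findings):
    print(f.rule_id, f.severity, "reachable" if f.reachable else "unreachable")
```

Keeping the reason next to each suppression makes the list reviewable later, so disabled rules do not silently become blind spots.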

Aligning methodologies with secure development workflows

No matter how polished, security testing in isolation will underperform if it clashes with how your team actually builds software. A strong methodology is woven into your development process: tests run automatically in CI/CD, security gates are lightweight but meaningful, and developers see security feedback early, when fixes are cheaper. While reviewing your approach, trace a typical feature from idea to production and mark where security considerations appear. If they mostly show up at the very end as a “final scan,” refactor the process. Shift some testing left by adding pre‑commit hooks, static checks in CI and threat discussions during design reviews. At the same time, maintain right‑side controls like staging‑environment DAST and periodic manual reviews. Experts consistently emphasize that harmony between workflow and testing yields better results than any individual tool or external assessment could achieve on its own.
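
A lightweight but meaningful gate can be a short script in the CI pipeline that fails the build only on serious findings. This sketch assumes the scanners’ results were already converted into a simple JSON list of objects with `id` and `severity` fields; that format, the script name and the blocking threshold are all assumptions.

```python
import json
import sys

BLOCKING = {"critical", "high"}  # assumed threshold: lower severities are reported, not blocking


def gate(findings: list[dict]) -> int:
    """Return a process exit code: 1 if any blocking finding exists, else 0."""
    blocking = [f for f in findings if f.get("severity", "").lower() in BLOCKING]
    for f in blocking:
        print(f"BLOCKING: {f.get('id', '?')} ({f['severity']})", file=sys.stderr)
    return 1 if blocking else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage in CI: python security_gate.py findings.json
    with open(sys.argv[1]) as fh:
        sys.exit(gate(json.load(fh)))
```

Because CI systems generally treat a non‑zero exit code as a failed step, the gate only needs to return the right code; medium and low findings can still flow into the team’s normal backlog.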

Putting it all together for a sustainable improvement plan

Evaluating a project’s security testing methodologies is not a grand audit you do once; it is a skill you refine. Start by understanding what you already do, map it to actual risks, and fill glaring gaps with focused tools and processes rather than random purchases. Then, where needed, augment your capabilities with targeted application security testing services or periodic VAPT engagements, making sure their insights genuinely reshape your internal practices. Keep an eye on metrics so you can see whether changes are moving the needle, and treat every serious finding as a chance to strengthen your methodology, not just to patch a bug. Over time, this iterative, feedback‑driven approach turns security from a mysterious expert domain into a routine part of how your team thinks about building and shipping software that users can trust.