Why evaluating risk‑reduction strategies matters more than a glossy risk register

Most teams think they “manage risk” once they’ve filled in a spreadsheet with colored cells. The uncomfortable truth: many of those risk-reduction strategies quietly fail, and nobody notices until a deadline collapses. Evaluating measures is less about bureaucracy and more about checking: “Is what we’re doing actually changing the odds?” This guide walks through a practical, step‑by‑step way to test your ideas, using simple metrics, real‑world cases, and a bit of healthy skepticism instead of endless presentations.

Step 1. Start with the real question: what exactly are you trying to reduce?

Before numbers and dashboards, clarify which dimension of risk you’re targeting: probability, impact, detection speed, or all three. Many teams write, “Mitigate vendor risk,” but never say whether they want fewer incidents, shorter outages, or smaller losses. A precise goal might sound like: “Cut the likelihood of a critical supplier delay from 30% to 10% this quarter.” Without that clarity, even the best project risk management consultants can’t tell whether a control is doing anything meaningful.

Case: The “mitigated” risk that never moved

A fintech startup added extra code reviews to “reduce security risk.” Six months later, they felt safer, but audit data showed the number of severe findings per release hadn’t changed. Why? They never defined what “better” meant. Once they reframed the goal as “halve the rate of critical vulnerabilities per release,” they realized their reviews focused on style, not risky logic. Same effort, wrong target. Evaluating measures started with a sharper definition, not with new tools.

Step 2. Turn fuzzy fears into measurable risk hypotheses

A useful risk-reduction strategy is a testable hypothesis: “If we do X, then probability/impact of Y will change from A to B.” This sounds formal, but in practice it’s a sanity check. For instance: “If we engage a second supplier, the chance of a stock‑out drops from 25% to under 5%.” Such statements keep discussions grounded. They also make it easier to work with external risk assessors, because both sides can agree on what “success” will look like, not just on elegant slide decks.
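As a minimal sketch, a hypothesis of this shape can be written down as a small record and checked against what you later observe. The class name `RiskHypothesis`, its fields, and the observation figures are illustrative assumptions, not part of any standard tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskHypothesis:
    """Hypothetical record: 'If we do X, the rate of Y drops from A to under B.'"""
    action: str           # the mitigation (X)
    event: str            # the unwanted event (Y)
    baseline_rate: float  # A, as a fraction between 0 and 1
    target_rate: float    # B, as a fraction between 0 and 1

    def is_met(self, occurrences: int, opportunities: int) -> bool:
        """True if the observed rate is strictly below the target rate."""
        if opportunities <= 0:
            raise ValueError("need at least one observation")
        return occurrences / opportunities < self.target_rate


second_supplier = RiskHypothesis(
    action="engage a second supplier",
    event="stock-out",
    baseline_rate=0.25,
    target_rate=0.05,
)

# Made-up observation: 2 stock-outs across 50 order cycles (4%).
print(second_supplier.is_met(occurrences=2, opportunities=50))  # True
```

Writing the target down before the rollout is the point: the check itself is trivial, but it removes the temptation to redefine “success” afterwards.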

Newbie mistake: confusing activity with effect

Novices often list actions—extra meetings, more documents, new tools—and call them “risk mitigation.” But activities are only proxies. The effect is the change in odds or damage. A weekly sync might reduce miscommunication risk, or it might just consume time. Always ask: “What metric would have to shift for this to be worth it?” If you can’t name one, the tactic is probably comforting noise, not a real risk‑reduction move, regardless of how official it looks.

Step 3. Pick simple, trackable indicators before you deploy

You don’t need a PhD or an army of analysts. Choose a small set of indicators that reflect your risk hypothesis: frequency of incidents, time to detection, rework rate, cost overrun percentage, customer escalation count. Decide now how often you’ll measure them and what “good enough” means. When using the best project risk management software, resist the urge to enable every dashboard. Start from questions like “Where are we bleeding?” and “How will we know we stopped?” and configure only those views that answer them clearly.
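To make this concrete, here is a rough sketch of two such indicators, incident frequency and time to detection, computed from a plain incident log. The log format, the dates, and the function names are assumptions made for illustration.

```python
from datetime import datetime
from statistics import mean

# Hypothetical incident log: (occurred_at, detected_at) pairs.
incidents = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 13, 0)),
    (datetime(2024, 3, 11, 10, 0), datetime(2024, 3, 12, 10, 0)),
    (datetime(2024, 3, 20, 8, 0), datetime(2024, 3, 20, 10, 0)),
]
weeks_observed = 4


def incidents_per_week(log, weeks):
    """Frequency indicator: average number of incidents per week."""
    return len(log) / weeks


def mean_hours_to_detection(log):
    """Detection indicator: average gap between occurrence and detection."""
    return mean((detected - occurred).total_seconds() / 3600
                for occurred, detected in log)


print(incidents_per_week(incidents, weeks_observed))  # 0.75
print(mean_hours_to_detection(incidents))             # 10.0
```

Two numbers like these, reviewed on a fixed schedule, are often enough to answer “Where are we bleeding?” before any dashboard is configured.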

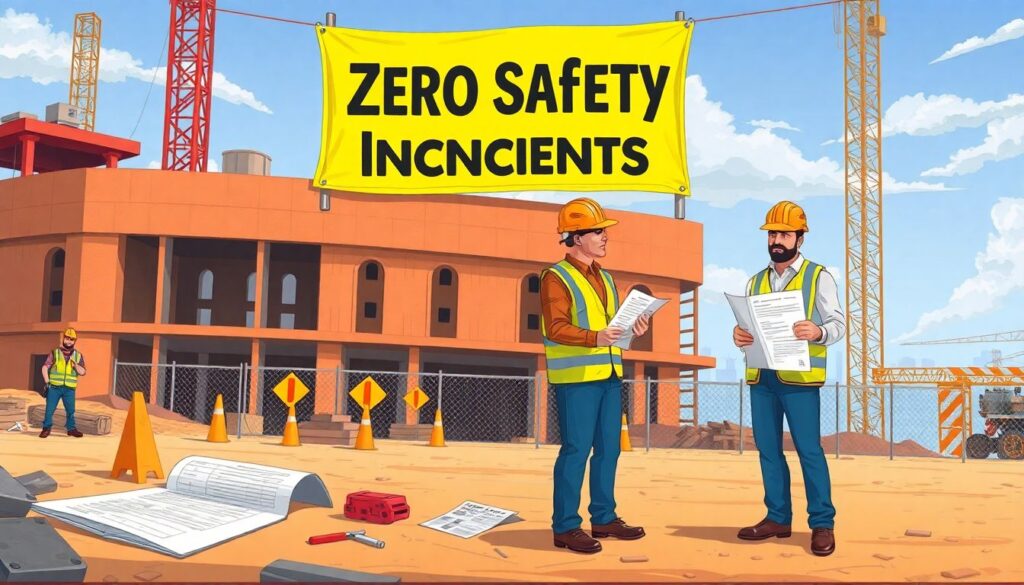

Case: Metrics that accidentally hid a looming failure

A construction project proudly reported “zero safety incidents” for months. Later investigation showed minor accidents weren’t logged because the form took 20 minutes to fill. The apparent improvement was a data-collection failure. When they shortened the report to 2 minutes and allowed verbal logging on site, incident numbers “worsened,” then truly dropped as new training took effect. Evaluating risk reduction required checking if indicators were honest, not just pretty.

Step 4. Run a baseline: how bad is it before any mitigation?

Teams skip this constantly and sabotage their own evaluation. To know if a strategy works, you need a clear “before” picture. For two to four weeks, track your selected indicators without adding new controls. Capture near‑misses, small issues, and time lost to firefighting. If you’re already mid‑project, reconstruct a baseline from past logs, emails, and ticket histories. When you later bring in risk mitigation consultants, this baseline becomes the yardstick that separates feel‑good stories from measurable improvements.
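Reconstructing a baseline from a ticket history can be as simple as counting tickets per week. The sketch below assumes you can export ticket open dates; the dates and function names are hypothetical.

```python
from collections import Counter
from datetime import date

# Hypothetical ticket open dates recovered from a past ticket history.
ticket_dates = [
    date(2024, 2, 5), date(2024, 2, 7), date(2024, 2, 13),
    date(2024, 2, 14), date(2024, 2, 15), date(2024, 2, 27),
]


def weekly_counts(dates):
    """Count tickets per ISO (year, week) pair."""
    return Counter(d.isocalendar()[:2] for d in dates)


def baseline_per_week(dates, weeks_observed):
    """Average tickets per week across the whole window, quiet weeks included."""
    return len(dates) / weeks_observed


counts = weekly_counts(ticket_dates)
print(sorted(counts.items()))
# [((2024, 6), 2), ((2024, 7), 3), ((2024, 9), 1)]
print(baseline_per_week(ticket_dates, weeks_observed=4))  # 1.5
```

Note that week 8 had no tickets. Dividing by the three weeks that appear in the counts would give 2.0 and overstate the baseline, so the window length is passed in explicitly.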

Warning: retrofitting baselines is error‑prone

If you only start measuring after a major scare, it’s tempting to “estimate” how it used to be. Human memory is biased: people underreport old chaos and overstate recent pain. For critical projects, insist on light‑weight tracking from the start, even if metrics are imperfect. A rough baseline collected in real time beats a perfect baseline guessed six months later. This discipline turns your project into a learning system instead of a sequence of unrelated crises.

Step 5. Implement one major risk‑reduction change at a time

When trouble hits, leadership often launches a swarm of actions: new tools, extra approvals, emergency hires. Evaluating which one actually worked then becomes almost impossible. Wherever feasible, phase measures. Introduce early‑warning alerts this month, add extra reviews only next month. This staggered approach lets you see which intervention moves the needle. If you’re hiring a project risk management expert, align on a phased rollout plan rather than a huge “big‑bang” mitigation package that obscures causality.

Case: The over‑engineered “solution” to schedule risk

A media company facing delays adopted three ideas at once: a new planning tool, stricter change control, and daily stand‑ups. Delivery improved, but nobody knew why. When a later project skipped the stand‑ups, delays returned. Post‑mortem analysis showed the software was underused, and change control mostly rubber‑stamped. The real driver was fast daily communication. Had they phased changes, they would’ve learned this months earlier—and avoided paying for unused features in an advanced platform.

Step 6. Compare data: before vs. after, not hope vs. opinion

Once your strategy runs for a full cycle—say, a sprint, milestone, or month—compare numbers to your baseline. Look for shifts in both central metrics (average delay, average defect rate) and tails (extreme overruns, rare incidents). Don’t panic if the first measurements are noisy; risks are probabilistic by nature. What you’re after is a visible trend: fewer surprises, faster detection, less severe fallout. When working with project risk management consulting services, insist they help interpret variance, not just show colorful charts.
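A before/after comparison that looks at both the average and the tail might be sketched like this. The delay figures and the 10-day tail threshold are made up for illustration; pick a threshold that matches what counts as an “extreme overrun” on your project.

```python
from statistics import mean

# Made-up delivery delays in days, one value per work item.
baseline_delays = [2, 3, 1, 4, 12, 2, 3, 5, 2, 15]
after_delays = [1, 2, 1, 3, 4, 2, 1, 3, 2, 6]


def summarize(delays, tail_threshold):
    """Return the mean delay and the share of items above the tail threshold."""
    tail_share = sum(d > tail_threshold for d in delays) / len(delays)
    return {"mean": mean(delays), "tail_share": tail_share}


before = summarize(baseline_delays, tail_threshold=10)
after = summarize(after_delays, tail_threshold=10)

print(before)  # {'mean': 4.9, 'tail_share': 0.2}
print(after)   # {'mean': 2.5, 'tail_share': 0.0}
```

With only ten items per period, a shift like this is suggestive rather than conclusive, which is why the comparison should be repeated over several cycles.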

Newbie mistake: declaring victory after one calm week

A quiet period might mean your mitigation is working—or you just got lucky. For low‑frequency but high‑impact risks (like data breaches or major outages), you need a longer window and richer indicators. For example, count how many times a control actually triggered an alert, how many near‑misses were caught earlier, or how many vulnerabilities were removed before release. Single snapshots seduce; trend lines teach. Steady improvement over several cycles builds real confidence.

Step 7. Stress‑test your strategies with “failure rehearsals”

Numbers tell only part of the story. Complement them with scenario exercises: “What if this vendor collapses tomorrow?” Walk through your current controls and see where they break. In tech projects, run game days: deliberately disable a non‑critical component and see how monitoring, failover, and communication handle it. For complex portfolios, external risk assessors often facilitate workshops where stakeholders simulate chain reactions. Weak points revealed in a rehearsal are inexpensive lessons; discovered in production, they’re headline material.

Case: Discovering the single point of failure nobody owned

An e‑commerce team believed they’d mitigated payment risk with “multiple gateways.” During a chaos drill, they disabled the primary gateway and learned the fallback credentials were expired, and no one knew who maintained them. The “mitigation” existed only on a slide. Fixing ownership, rotation, and periodic testing did more for risk reduction than any additional gateway. Evaluation here wasn’t theoretical—it was driven by a safe, simulated failure that exposed real‑world gaps.
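Between drills, a lightweight periodic check can catch the same kind of gap on paper. The sketch below assumes a hand-maintained inventory of fallbacks; the entry names, owners, and dates are hypothetical, and a check like this complements a real drill rather than replacing it.

```python
from datetime import date

# Hypothetical inventory of fallbacks; names, owners, and dates are made up.
fallbacks = [
    {"name": "primary-gateway", "owner": "payments-team",
     "credentials_expire": date(2025, 6, 30)},
    {"name": "backup-gateway", "owner": None,
     "credentials_expire": date(2024, 1, 15)},
]


def audit_fallbacks(entries, today):
    """Return (name, problem) pairs for fallbacks that would fail a drill."""
    problems = []
    for entry in entries:
        if not entry["owner"]:
            problems.append((entry["name"], "no owner"))
        if entry["credentials_expire"] <= today:
            problems.append((entry["name"], "credentials expired"))
    return problems


print(audit_fallbacks(fallbacks, today=date(2024, 9, 1)))
# [('backup-gateway', 'no owner'), ('backup-gateway', 'credentials expired')]
```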

Step 8. Factor in cost, friction, and side effects

A mitigation that technically works but paralyzes the team is not a win. Include implementation cost, maintenance effort, morale impact, and delays in your evaluation. If a control adds heavy approval layers, people will find shortcuts or simply stop reporting issues honestly. Effective strategies reduce overall project risk without overwhelming the project’s ability to deliver. When you work with risk mitigation consultants, challenge recommendations that raise governance overhead far beyond the risk they address.
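One rough way to weigh a control against its cost is to compare the drop in expected loss with what the control costs to run. The sketch below uses expected loss as probability times impact, which is a common simplification, and all figures are invented. It captures only direct cost, so morale and friction still need a qualitative look.

```python
def expected_loss(probability, impact):
    """Expected loss as probability times impact (a common simplification)."""
    return probability * impact


def net_benefit(p_before, p_after, impact, control_cost):
    """Reduction in expected loss minus what the control costs to run."""
    saved = expected_loss(p_before, impact) - expected_loss(p_after, impact)
    return saved - control_cost


# Made-up figures: a delay costing 200,000, with two candidate controls.
light_control = net_benefit(0.30, 0.10, impact=200_000, control_cost=15_000)
heavy_control = net_benefit(0.30, 0.08, impact=200_000, control_cost=60_000)

print(round(light_control))  # 25000
print(round(heavy_control))  # -16000
```

In this invented case the heavier control reduces the probability slightly more, yet costs more than the loss it prevents.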

Newbie tip: watch for “shadow systems” as a red flag

When controls are overly rigid, staff quietly create parallel processes: side spreadsheets, private Slack channels, unapproved tools. These shadow systems indicate friction, and they also create new, hidden risks. During evaluation, ask team members how they really get work done. If the official mitigation isn’t used or is bypassed, its perceived effectiveness is misleading. Designing smaller, lighter controls that people willingly adopt often reduces risk more than heavy frameworks nobody follows consistently.

Step 9. Use external help wisely: experts and tools as amplifiers

External support can speed up learning—but only if you stay in the driver’s seat. The best project risk management software helps you store assumptions, track indicators, and visualize trends, not decide your priorities. Likewise, hiring a project risk management expert makes sense when stakes are high or your team is inexperienced, but insist on knowledge transfer. Ask them to document hypotheses, explain trade‑offs, and design simple dashboards your team can maintain once consultants leave.

Case: Consulting that actually stuck

A pharmaceutical firm brought in a specialist after repeated clinical trial delays. Instead of delivering a thick report, the expert co‑designed lightweight risk reviews at each protocol change, identified three critical indicators, and trained project managers to run monthly “risk health checks.” Within a year, delay variance shrank, and the process ran without external help. The value wasn’t just new practices, but a culture of continuous evaluation that future‑proofed their later projects.

Step 10. Build a continuous feedback loop, not a one‑time audit

Risk landscapes shift: new regulations, staff turnover, unexpected dependencies. Treat your evaluation process as recurring: at set intervals, review which strategies still work, which generate friction, and which new threats emerged. Adjust hypotheses, retire obsolete controls, and add experiments where needed. Over time, this loop transforms risk management from a compliance checkbox into a practical learning mechanism that keeps projects resilient. The aim is not zero risk, but fewer nasty surprises and more informed decisions under uncertainty.

Quick recap: a compact checklist to use on your next project

1. Define the specific dimension of risk you want to cut.

2. Turn each mitigation into a clear, testable hypothesis.

3. Choose honest, lightweight indicators and record a baseline.

4. Roll out major changes in phases so impact is visible.

5. Compare trends over several cycles, not single weeks.

6. Stress‑test with scenarios or drills to expose weak spots.

7. Weigh costs and side effects, watching for shadow systems.

8. Use experts and tools as enablers, not crutches.

9. Revisit and refine strategies on a regular cadence.